AI has evolved from a science fiction concept into a fundamental pillar of modern technology. It’s transforming industries, particularly financial services, where the balance between progress and risk is more precarious than ever. AI’s ability to mimic aspects of human intelligence is opening up efficiencies and insights once thought impossible. However, like any transformative technology, AI also has a darker side. Its advantages come with significant risks, making it crucial to approach its development and use with care and responsibility.

The Bright Promise of AI

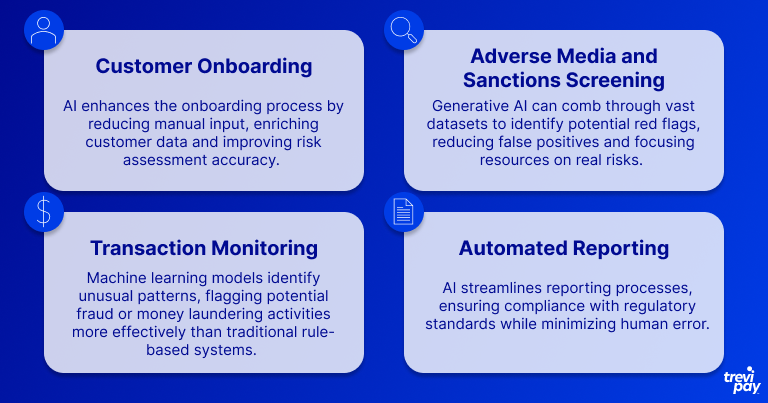

In the world of financial services, AI is more than a buzzword; it’s a game-changer. Consider the customer onboarding process, where AI verifies identities, amends customer and company data and identifies potential risks in seconds. Or consider reviewing media mentions and potential matches on a sanctions list: tedious tasks for humans, but ones that AI can learn to support with increasing accuracy, flagging relevant issues while cutting down on false positives.
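The screening step described above can be illustrated with a minimal fuzzy name-matching sketch. It assumes a hypothetical in-memory watchlist, hypothetical helper names (`normalize`, `screen`, `WATCHLIST`) and an illustrative 0.85 similarity threshold. It uses Python’s standard-library `difflib` string similarity rather than a trained model, so it shows only how near-matches are scored and filtered, not how an AI system learns to cut false positives. Production screening relies on official list data, aliases, transliteration, secondary identifiers and human review.

```python
from difflib import SequenceMatcher
import unicodedata


def normalize(name: str) -> str:
    """Lowercase, strip accents and collapse whitespace so trivial differences don't block a match."""
    decomposed = unicodedata.normalize("NFKD", name)
    ascii_only = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    return " ".join(ascii_only.lower().split())


def screen(name: str, watchlist: list[str], threshold: float = 0.85) -> list[tuple[str, float]]:
    """Return watchlist entries whose similarity to `name` meets the threshold, best match first."""
    query = normalize(name)
    hits = []
    for entry in watchlist:
        # ratio() = 2 * matching characters / total characters, in [0, 1]
        score = SequenceMatcher(None, query, normalize(entry)).ratio()
        if score >= threshold:
            hits.append((entry, round(score, 3)))
    return sorted(hits, key=lambda hit: hit[1], reverse=True)


# Hypothetical watchlist entries, for illustration only (not real sanctions data).
WATCHLIST = ["Ivan Petrov", "Acme Shell Trading Ltd", "José García"]

print(screen("Jose Garcia", WATCHLIST))   # [('José García', 1.0)]
print(screen("Ivan Petrova", WATCHLIST))  # [('Ivan Petrov', 0.957)]
print(screen("Jane Smith", WATCHLIST))    # []
```

Raising the threshold reduces false positives at the cost of missing more genuine spelling variants; lowering it does the opposite. That trade-off is what a learned model tries to manage better than a fixed cut-off.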

Transaction monitoring, another area transformed by AI, now uses machine learning models that can spot unusual patterns in real time, significantly strengthening fraud prevention. Even complex reporting tasks, long a burden on teams, can be partly automated with AI, reducing errors and freeing up time for higher-value work.
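As a rough illustration of “spotting unusual patterns”, the sketch below flags a transaction when its amount sits far from the same customer’s recent history, measured as a z-score. This is a simple statistical baseline swapped in for clarity, not a machine learning model. The class name `AmountMonitor`, the 20-transaction window, the 5-transaction warm-up and the 3.0 threshold are all hypothetical choices.

```python
from collections import defaultdict, deque
from statistics import mean, pstdev


class AmountMonitor:
    """Hypothetical monitor: flags amounts far from a customer's own recent history (z-score)."""

    def __init__(self, window: int = 20, z_threshold: float = 3.0, min_history: int = 5):
        self.z_threshold = z_threshold
        self.min_history = min_history
        # One bounded history of recent amounts per customer; the oldest amounts drop off automatically.
        self.history = defaultdict(lambda: deque(maxlen=window))

    def check(self, customer_id: str, amount: float) -> bool:
        """Return True if `amount` looks anomalous, then record it in the customer's history."""
        past = self.history[customer_id]
        flagged = False
        if len(past) >= self.min_history:  # skip scoring until there is enough history
            mu = mean(past)
            sigma = pstdev(past)
            if sigma == 0:
                # Every past amount was identical, so any different amount is treated as unusual.
                flagged = amount != mu
            else:
                flagged = abs(amount - mu) / sigma > self.z_threshold
        # Flagged amounts are also recorded here; a real system might exclude them from the baseline.
        past.append(amount)
        return flagged


monitor = AmountMonitor()
for amount in [120.0, 95.5, 130.0, 110.0, 105.0, 98.0, 125.0]:
    monitor.check("cust-42", amount)  # builds up the customer's baseline

print(monitor.check("cust-42", 115.0))   # False: close to recent amounts
print(monitor.check("cust-42", 5000.0))  # True: far outside recent history
```

Real monitoring systems combine many signals (merchant, location, device, timing) and learn what “normal” looks like for each customer; a single-feature z-score is only the simplest version of that idea.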

The result? A more efficient, effective system that not only saves money but also makes it easier to stay ahead of evolving threats.

The Dark Side of AI

But for all its promise, AI has a dark side. The same technology that helps prevent fraud can be used to commit it. Cybercriminals use AI to infiltrate financial systems, create hyper-realistic deepfakes for extortion and even orchestrate market manipulation.

The risks don’t stop there. AI-driven schemes have been linked to corporate espionage, online account takeovers and even support for terror attacks. And as AI grows more sophisticated, we’re seeing the emergence of chilling new crime types: data poisoning, burglar bots and forgery-as-a-service, to name a few. These aren’t isolated incidents; they’re part of a growing trend of “crime as a service”.

Significant threats posed by AI:

- Massive Fraud: AI-driven fraud exploits vulnerabilities in financial systems, enabling large-scale scams.

- Access to International Financial Systems: AI tools can manipulate or infiltrate sensitive systems, potentially causing widespread disruption.

- Terror Attacks and Deepfakes: Generative AI creates realistic but fake media that can be used for extortion, ransom demands, misinformation campaigns or even to support terror activity.

- Corporate Espionage: AI aids in stealing sensitive data, giving competitors an unfair advantage.

- Online Account Takeovers: Cybercriminals use AI to hijack personal and corporate accounts.

The Challenge of Explainability

A major challenge with AI is its lack of transparency. That is why “explainability” is critical in AI development and deployment: it means being able to explain how an AI model works and how and why it arrives at its decisions or outcomes. Stakeholders must understand:

- How AI Works: The mechanisms driving AI decisions.

- Why It Generates Specific Outcomes: The rationale behind predictions or actions.

This is especially vital in regulated sectors such as finance, where regulatory compliance requires clear accountability.
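One simple form of explainability applies to linear models: a score can be broken down into per-feature contributions (weight times value), so a reviewer can see which inputs drove a decision. The sketch below assumes a hypothetical logistic risk score whose feature names, weights and bias are invented for illustration, along with a hypothetical `explain` helper. More complex models generally need dedicated attribution techniques, such as SHAP or LIME, which this sketch does not implement.

```python
import math

# Hypothetical features and weights for an illustrative logistic risk score.
WEIGHTS = {
    "amount_vs_typical": 1.2,   # how many times larger than the customer's usual amount
    "new_device": 0.8,          # 1 if the device has not been seen before, else 0
    "country_mismatch": 1.5,    # 1 if billing and IP countries differ, else 0
    "account_age_years": -0.4,  # older accounts are treated as lower risk
}
BIAS = -3.0


def explain(features: dict[str, float]) -> tuple[float, list[tuple[str, float]]]:
    """Return the risk probability and each feature's contribution to the log-odds, largest first."""
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    logit = BIAS + sum(contributions.values())
    probability = 1 / (1 + math.exp(-logit))  # logistic function maps log-odds to [0, 1]
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return probability, ranked


prob, reasons = explain(
    {"amount_vs_typical": 2.5, "new_device": 1, "country_mismatch": 1, "account_age_years": 3}
)
print(f"risk probability: {prob:.2f}")
for name, contribution in reasons:
    print(f"{name:>20}: {contribution:+.2f}")
```

Here the ranked contributions show that the unusually large amount and the country mismatch pushed the score up, while the account’s age pulled it down: the kind of rationale an auditor or customer can follow.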

The Role of Regulation: EU’s AI Act

Recognizing these challenges, governments are stepping in to regulate AI. The EU has taken a pioneering step with its AI Act, the world’s first comprehensive legal framework for AI, designed to ensure AI is used responsibly. This legislation aims to address the risks posed by AI while setting clear rules for its deployment in high-stakes applications.

The Act is now in force, but the majority of its provisions become enforceable from August 2026 across the EU’s member states. Its measures include banning practices deemed too dangerous, setting strict standards for high-risk AI systems and creating a governance structure to ensure compliance.

This regulation could serve as a global benchmark, showing how we can foster innovation without compromising on safety or accountability.

Key Rules Include:

- Addressing AI-specific risks.

- Prohibiting unacceptable AI practices.

- Listing high-risk applications with stringent requirements.

- Mandating conformity assessments.

- Establishing governance structures for enforcement.

TreviPay: Harnessing AI for Good

At TreviPay, our compliance and risk teams are harnessing the power of AI to fight fraud, meet regulatory compliance standards and protect customers. By combining generative AI models with advanced machine learning techniques, they tackle challenges such as bot detection, behavioral biometrics and transaction validation.

These tools don’t just identify suspicious activity; they help prevent it, keeping the financial ecosystem secure. In doing so, TreviPay exemplifies how AI can be used responsibly, balancing cutting-edge innovation with a commitment to trust and safety.

Walking a Tightrope

AI’s simulation of human intelligence holds immense promise but comes with risks. As industries like financial services increasingly rely on AI, maintaining a balance between innovation and regulation is crucial. Institutions must address AI’s dark side while embracing explainability, transparency and regulatory frameworks such as the EU AI Act.

AI’s future lies in building systems that are not only efficient but also ethical and accountable, ensuring it serves humanity’s best interests.